It seems fitting that the first article of a blog should lay out the foundation of my beliefs. So…

What is?

I think, therefore I am - Descartes

We can be sure that something exists which does the thinking. You might now argue that abstract things like language and mathematics exist too, since a version of them can be part of your consciousness, which exists. But beyond that nothing concrete needs to exist, as thought experiments like the Brain in a vat or Boltzmann Brains show: they are not falsifiable, so you cannot prove that you are not one of them, and therefore you cannot prove that anything concrete must exist.

So this question cannot be settled beyond yourself and the abstract concepts which exist within your thoughts.

Why?

1. Analogy/Intuition building

A lot of triple-A games have started to include minigames, for example a card or board game in a tavern. Why is that interesting to us? Well, consider playing a chess minigame. When you capture a piece – why do you do it?

The naïve answer is: “To win the game”. But then someone might ask: “But why do you want to win the game?” At which point you could go one level up and argue that, as the player character in the tavern, you want to win the bet placed on the game, or improve your skills such that you can win such a bet in the future. A follow-up might be: “Fair enough, but why do you want to earn money?” You might of course argue that you can use it to buy a certain item, which will help you in your campaign. But then you could still ask: “Why do you want to beat the campaign of this game?” You might go another level up and argue that you want to beat this campaign in order to talk with your friends about it in real life.

Continuing to ask “why?” long enough will eventually lead you to a point where you would have to state the meaning of life, i.e. the purpose of all your actions in your life. At which point some people go one level up and say: “To please my god and get into heaven”. But if we take a step back, we should start to realize: going one level up is not really solving the problem, it is just avoiding it.

2. Abstract Reasoning

Any answer to a “why?” question could again be questioned. Such a chain of reasoning either

- goes on forever,

- goes in circles, or

- ends at some point

In order to build some intuition for this, consider someone who “walks” in order “to walk 1km”. We have an action with a purpose. We could now easily expand this chain by supporting the action of “walking” with the reason “to walk 0.5km”, supporting the action of “walking 0.5km” with the goal of “walking 0.75km”, and so on. Immediately we get an infinite chain of reasoning. And since neither the infinite chain of reasoning nor the circular reasoning is really satisfying, you might want to ask for a meta-reason for the entire chain/circle. In our example this could be “to walk 1km”.

But then we run into the same problem with chains of meta-reasons. In the end, we will not get to a truly satisfying answer. Now the naïve answer: “To win the game” suddenly sounds much better. If we already know we can get nowhere with our questions, maybe we should not start asking them to begin with.

This might be a flavor of Absurdism, although I have only skimmed the Wikipedia article and watched a video, so I am not quite sure.

But if there is no meaning of life,

What do?

The absence of goals allows us to choose our own. And if we are allowed to do so, we might as well choose them according to our preferences. This will be slightly different for everyone.

What is (second time)?

As already argued, we cannot definitively answer this question. But since we now have to choose what we want to do based on our preferences, it is helpful to have a model of the world around us: it lets us predict the effects of our actions and thus evaluate them according to our preferences. Note that the evaluation of different actions changes with our model of the world, since the model determines the predicted effects of our actions, and those effects are what we evaluate.

With fairly light assumptions on the preference relation (completeness, transitivity, continuity and independence; cf. the Von Neumann-Morgenstern Utility Theorem) a utility function can be constructed. Once this utility function is obtained, it is easy to see that we are in the setting of Reinforcement Learning (i.e. find the best policy/behaviour, the one which maximizes the expected utility).
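To make this a bit more tangible, here is a minimal sketch of choosing an action by expected utility. Everything in it (the umbrella scenario, the outcome names, the probabilities and the utility numbers) is made up for illustration; it only shows how a world model and a utility function combine into a choice, and how the choice flips when the model changes.

```python
# Toy illustration; every action, outcome, probability and utility is invented.

def expected_utility(action, world_model, utility):
    """Average the utility of each outcome, weighted by its predicted probability."""
    return sum(prob * utility[outcome]
               for outcome, prob in world_model[action].items())

def best_action(world_model, utility):
    """Pick the action whose predicted consequences we like best on average."""
    return max(world_model, key=lambda a: expected_utility(a, world_model, utility))

# Preferences: a number per outcome (higher means more preferred).
utility = {"dry_unburdened": 1.0, "dry_burdened": 0.8, "wet": -1.0}

# Two different models of the world: action -> {outcome: probability}.
rainy_model = {
    "take_umbrella": {"dry_burdened": 1.0},
    "leave_umbrella": {"dry_unburdened": 0.7, "wet": 0.3},
}
sunny_model = {
    "take_umbrella": {"dry_burdened": 1.0},
    "leave_umbrella": {"dry_unburdened": 0.95, "wet": 0.05},
}

print(best_action(rainy_model, utility))  # the umbrella wins under this model
print(best_action(sunny_model, utility))  # same preferences, different choice
```

The preferences are identical in both calls; only the model differs, and with it the preferred action.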

No matter the preferences, it is beneficial if the model of the world has a lot of predictive power, since that will result in valuations of actions closer to the “true” valuation, which would require perfect knowledge about how the world works. So how can we obtain a model with high predictive power?

This question seems similar to “What is?” on the surface. If we know what is, we can use this to predict what will be. The difference is that we can discard thought experiments like the Boltzmann Brain as not useful for predicting future events. Sure, it might be the case that you are such a Boltzmann Brain, but in that case, you will cease to exist in a few moments anyway and it does not matter what you do. And whether or not you are a brain in a vat in a simulated reality or “real” does not matter either, since we are not trying to definitively answer what truly is, but rather how our actions will influence what will be, and whether we like it. And then it does not matter whether the result is simulated or not.

For simplicity (and we will soon touch on how important simplicity is) it might be useful to assume that your senses do tell you something about the real world. Now that we have justified why we can rely on our senses again, we have essentially justified the Scientific Method (hypothesize, predict, test/observe, repeat). Making predictions from an existing hypothesis is relatively straightforward, and so is testing these predictions.

Whether to keep testing (generating further predictions which can be checked) or to use a well-tested hypothesis (a theory) for the predictions which actually interest you (for choosing actions according to your preferences) is known and studied as the exploration vs. exploitation trade-off in Reinforcement Learning.
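A common textbook illustration of this trade-off is the epsilon-greedy strategy on a “multi-armed bandit”. The sketch below is my own toy version, not a reference implementation: the function name, the parameters and the reward probabilities are all assumptions chosen for the example. With probability `epsilon` we explore (try a random option), otherwise we exploit (use the option that currently looks best).

```python
import random

def epsilon_greedy(true_means, steps, epsilon, seed=0):
    """Play a toy bandit; true_means[i] is the (hidden) chance that arm i pays 1."""
    rng = random.Random(seed)
    n = len(true_means)
    counts = [0] * n        # how often each arm was tried
    estimates = [0.0] * n   # running average reward per arm (our "theory")
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n)                           # explore: test something
        else:
            arm = max(range(n), key=lambda a: estimates[a])  # exploit: trust the theory
        reward = 1.0 if rng.random() < true_means[arm] else 0.0
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # incremental mean
        total_reward += reward
    return counts, estimates, total_reward

# Arm 0 never pays, arm 1 always pays.
print(epsilon_greedy([0.0, 1.0], steps=1000, epsilon=0.0))  # never explores
print(epsilon_greedy([0.0, 1.0], steps=1000, epsilon=0.1))  # explores 10% of the time
```

With `epsilon=0.0` the agent never tests anything new, so it keeps pulling the first arm and never finds out that the second one is better. A little exploration is enough to discover that.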

What is not straightforward is generating hypotheses from observations. “What are good hypotheses?” or “What can we learn?” are the questions which Statistical Learning is concerned with. Highlights include the No Free Lunch Theorem, which motivates the intuitive preference of scientists for simple/elegant hypotheses.

We already argued that Mathematics exists, so statistical learning theory can be relied on.

While I recommend reading up on the “no free lunch theorem”, here is an intuitive explanation for the less mathematically inclined: If you do not assume that physical laws are independent of space and time, they become exceedingly difficult to test. How can you confirm that some hypothesis is true if the behaviour can change over time? It is quite hard to disprove a hypothesis which is allowed to change over time, since any surprising result just means that it changed in such a way that the results you got make sense again.

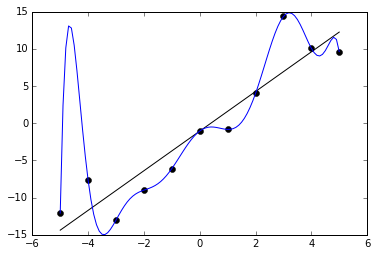

This problem is known as Overfitting and can be intuitively understood with pictures like Figure 1 & 2.

| | |
|---|---|
| Figure 1: By Chabacano - Own work, CC BY-SA 4.0, Link | Figure 2: By Ghiles - Own work, CC BY-SA 4.0, Link |
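For readers who prefer numbers to pictures, here is a small sketch of overfitting. The data is contrived by me: I assume the “true” rule is y = 2x and add alternating noise of +1 and -1 to six training points. A straight line is then compared with a degree-5 polynomial that passes through every training point exactly.

```python
def fit_line(points):
    """Ordinary least-squares straight line through the points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def fit_interpolating_polynomial(points):
    """Lagrange polynomial that hits every training point exactly."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(points):
            basis = 1.0
            for j, (xj, _) in enumerate(points):
                if i != j:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return predict

def mean_squared_error(model, points):
    return sum((model(x) - y) ** 2 for x, y in points) / len(points)

# Training data: assumed true rule y = 2x, plus noise +1, -1, +1, ...
train = [(0, 1), (1, 1), (2, 5), (3, 5), (4, 9), (5, 9)]
# Held-out points taken directly from the assumed true rule y = 2x.
held_out = [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0), (3.5, 7.0), (4.5, 9.0)]

simple = fit_line(train)
twisty = fit_interpolating_polynomial(train)

for name, model in [("line", simple), ("degree-5 polynomial", twisty)]:
    print(name,
          "| train MSE:", round(mean_squared_error(model, train), 3),
          "| held-out MSE:", round(mean_squared_error(model, held_out), 3))
```

The polynomial explains the training data perfectly, yet it does far worse than the line on points it has not seen, because it has also “explained” the noise.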

This simplicity requirement (which makes hypotheses robust) is the main problem with conspiracy theories. Sure, they might explain the same observations, but they require a lot more “twists and turns” and are hard to test, since an observation which does not fit is simply incorporated with more twists and turns. This lack of falsifiability is the same reason why God is a severe case of overfitting.

The number of twists and turns required to justify an omnipotent, benevolent god in a world with horrible disease is nauseating. And since it does not offer any measurable predictive power, the simpler hypothesis should be preferred.

Should

Where do morals come from in such a nihilistic worldview? Well, for one, most people prefer it if a certain set of rules known as morals is adhered to. And since we do not justify preferences and simply view them as axioms, there is not really anything we need to add to that.

But understanding where they come from might help us build a better (simpler?) model of the world, which is desirable as already justified. Adding together the ingredients from Game Theory (evolutionarily stable equilibria and the Folk Theorem) and our scientific evidence that we are in fact a product of evolution, it becomes apparent that an unspoken contract of not killing each other, etc. is in fact an evolutionarily stable Nash equilibrium in a repeated game. So the hypothesis that morals are in fact Folk Theorem-esque equilibria (which are enforced by the players) requires no additional assumptions and is as such extremely simple.

It is also a justification why people who do not have these equilibria baked into their preferences/feelings (psychopaths) would still benefit from adhering to these morals: in a Nash equilibrium, no individual can gain by deviating unilaterally.
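To give a feeling for how a repeated game can enforce cooperation, here is a toy sketch using the iterated prisoner's dilemma. The payoff numbers (5, 3, 1, 0), the “grim trigger” strategy and the discount factors are my assumptions for illustration. It only checks one possible deviation (always defecting) against one enforcing strategy, so it is an intuition pump, not a proof of an equilibrium or of evolutionary stability.

```python
# (my move, their move) -> my payoff; "C" = cooperate, "D" = defect.
PAYOFF = {
    ("C", "C"): 3, ("C", "D"): 0,
    ("D", "C"): 5, ("D", "D"): 1,
}

def grim_trigger(own_history, other_history):
    """Cooperate until the other side defects once, then punish forever."""
    return "D" if "D" in other_history else "C"

def always_defect(own_history, other_history):
    return "D"

def discounted_payoffs(strategy_a, strategy_b, discount, rounds=200):
    """Play the repeated game; a payoff in round t is weighted by discount**t."""
    hist_a, hist_b = [], []
    total_a = total_b = 0.0
    for t in range(rounds):
        move_a = strategy_a(hist_a, hist_b)
        move_b = strategy_b(hist_b, hist_a)
        total_a += discount ** t * PAYOFF[(move_a, move_b)]
        total_b += discount ** t * PAYOFF[(move_b, move_a)]
        hist_a.append(move_a)
        hist_b.append(move_b)
    return total_a, total_b

for discount in (0.9, 0.3):
    cooperate, _ = discounted_payoffs(grim_trigger, grim_trigger, discount)
    defect, _ = discounted_payoffs(always_defect, grim_trigger, discount)
    print("discount", discount, "| cooperate:", round(cooperate, 2),
          "| defect:", round(defect, 2))
```

If the future matters enough (discount 0.9), breaking the contract does not pay, because the one-time gain is outweighed by the punishment that follows. If the future barely matters (discount 0.3), defecting wins, which is why the repetition is essential to the argument.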

A word of caution

While evolution is an optimization algorithm, any intermediate step is generally not optimal. And even in the limit, the result might only be a local optimum. It is often remarkably close to optimal - which is why the entire field of Bionics exists. But there is no guarantee that this is the case.

Similarly, assuming our intuitive morals/empathy have to be optimal (i.e. evolutionarily stable equilibria) would likely be wrong. But at the same time, they are probably closer to optimal than we think. And Game Theory is only starting to understand the complex settings required to generate such behaviour. So it is likely a good rule of thumb to assume that our intuitive morals are rational in some way.

Trolley Problem

Let us consider the trolley problem for example, which is often used to test the edges of a moral system. In order to avoid overcomplicated sentences, and since Folk Theorem-esque equilibria are really (unwritten) social contracts, I will refer to this moral system as a social contract, with the understanding that there need not be a written contract.

A lot of attention is given to the detail that people are reluctant to pull the lever, and are especially reluctant to throw someone over the bridge railing in order to stop the trolley. This is no surprise once we realize that actively causing a death is a greater breach of the social contract than not preventing a death. The contract enables people to feel safe around other people, but it does not make any guarantee about natural causes. You could never feel safe around other people if they might, at any time, throw you under the bus for the greater good. The stress level of everyone would go through the roof. At the same time, everyone can and should be more attentive when they move into dangerous places like train tracks. If you asked people whether they prefer to live in a world where they can feel safe around other people but are a bit more likely to get killed if they put themselves into dangerous places, compared to the other way around, most would pick the first option. That is why this is the social contract which is enforced.

That said, some edge cases do not really have to be decided if they virtually never happen: if the probability of them happening is really low, they will have a negligible impact on the expected utility, no matter the behaviour/actions in that situation.
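As a rough sketch of that last point (the notation is mine): suppose the edge case occurs with probability $p$, two behaviours $a$ and $b$ differ only in how they handle that case, and the utility at stake in it is bounded by some $\Delta_{\max}$. Then

$$
\mathbb{E}[U \mid a] - \mathbb{E}[U \mid b] = p \,\bigl(U_{\text{edge}}(a) - U_{\text{edge}}(b)\bigr),
\qquad
\bigl|\mathbb{E}[U \mid a] - \mathbb{E}[U \mid b]\bigr| \le p \,\Delta_{\max}.
$$

So as $p$ goes to zero, the choice between $a$ and $b$ stops mattering for the expected utility. Note that this relies on the bounded-utility assumption; if the stakes in the edge case can grow without limit, the argument does not go through.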

Who is covered?

A lot of moral systems are problematic because they make a distinction between humans, animals and other things. But where are the borders? In the evolutionary progression from other apes, where does a human start to become a human and attain coverage of the other set of morals? If the set of morals is simply a Nash equilibrium/social contract, then the question whether someone is covered by it is simply the question whether someone is intelligent enough to agree to the terms and conditions of this contract. In other words: animals are not protected, because they cannot provide us the same guarantees in return. They are not part of the social contract and thus also cannot break it, which is why they are only tried in courts by nutcases.

Corollary: Artificial Intelligence should get these rights as soon as it can agree to this social contract.

Emotions vs Rationality

Since we do not require our preferences to be justified by some reason, our preferences and thus overarching goals will be greatly influenced by our emotions. But in order to avoid stumbling over your own feet, it is useful to evaluate actions rationally, i.e. to consider whether they will interfere with your other preferences down the road. In that sense rationality is a tool for modelling the world in order to achieve more desirable outcomes.